[AINews] Mixtral 8x22B Instruct sparks efficiency memes

Chapters

AI Discord Recap

AI Discords Highlights and Discussions

HuggingFace Discord

AI Discord Community Highlights

Handling Training Issues and Model Hosting

LM Studio Discord Updates

Model Comparisons and Misadventures

WizardLM-2 Performance Update and OpenRouter Discussion

Updates and Discussions on PyTorch and CUDA in Discord

AI Discussions and Developments

Cool Finds in HuggingFace

Axolotl-help-bot

OpenInterpreter Chat Discussions

Community Engagement and Requests for Clarifications

Text Data, Multimodal Data, and Tokenization

AI Discord Recap

Stable Diffusion 3 and Stable Diffusion 3 Turbo Launches: Stability AI introduced Stable Diffusion 3 and its faster variant, Stable Diffusion 3 Turbo, claiming superior performance over DALL-E 3 and Midjourney v6. The models use the new Multimodal Diffusion Transformer (MMDiT) architecture. Stability AI plans to release SD3 weights for self-hosting with a Stability AI Membership, continuing its open generative AI approach. The community awaits licensing clarification on personal versus commercial use of SD3.

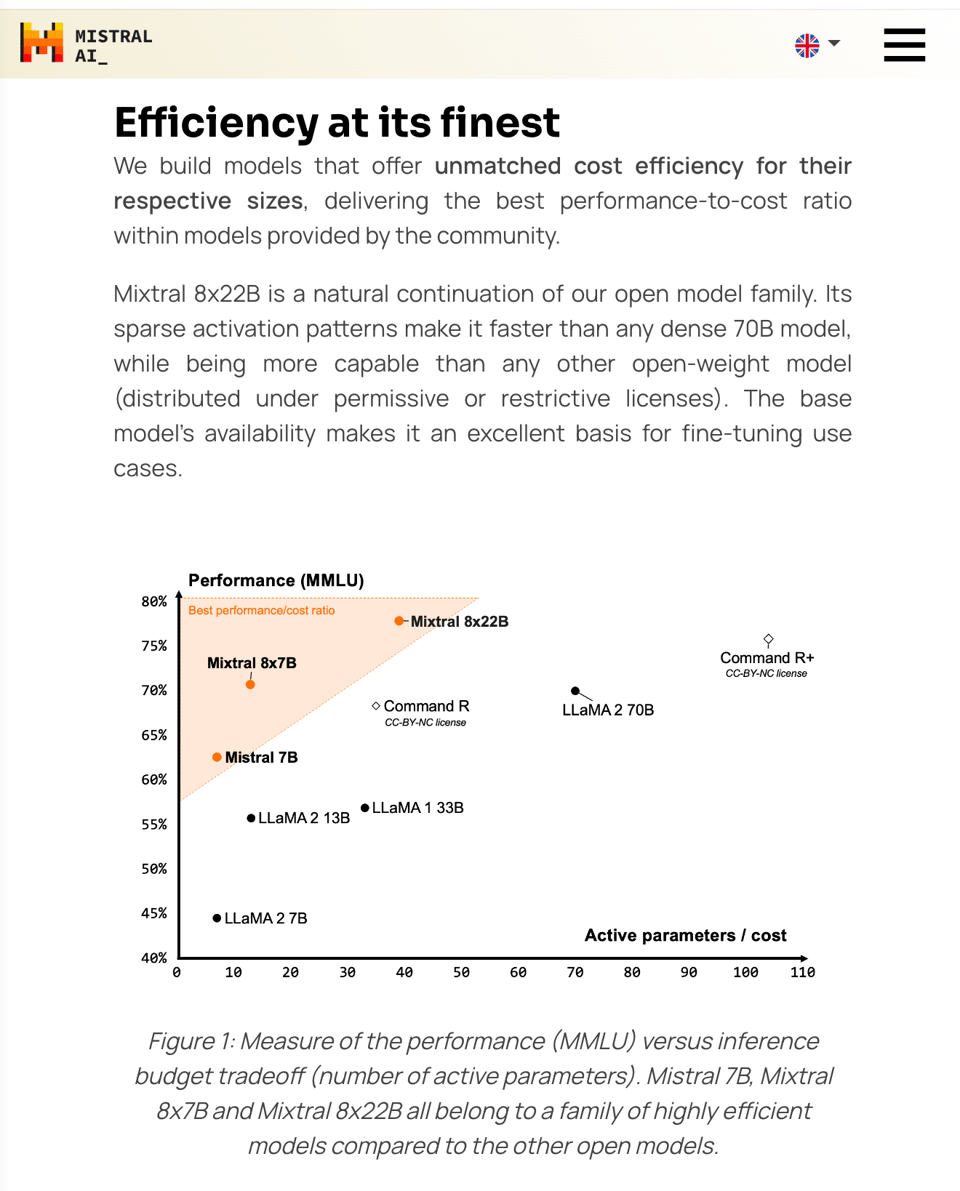

Unsloth AI Developments: Members discussed whether GPT-4 is a fine-tuned iteration of GPT-3.5 and praised the multilingual capabilities of Mistral 7B. The open-source release of Mixtral 8x22B under Apache 2.0 generated excitement, with members highlighting its multilingual fluency and long context window. Some also expressed interest in contributing to Unsloth AI's documentation and in donating to support its development.

WizardLM-2 Unveiling and Subsequent Takedown: Microsoft announced the WizardLM-2 family, including 8x22B, 70B, and 7B models, reporting competitive performance. However, WizardLM-2 was unpublished because it had not gone through a compliance review, not because of toxicity concerns as initially speculated. The takedown caused confusion and discussion, and some users expressed interest in obtaining the original version.

Stable Diffusion 3 Launches with Improved Performance: Stability AI has released Stable Diffusion 3 and Stable Diffusion 3 Turbo on its Developer Platform API, which it promotes as offering the fastest and most reliable performance. The community awaits clarification on the Stability AI Membership model for self-hosting SD3 weights. Meanwhile, members noted that SDXL finetunes have made SDXL refiners nearly obsolete, and users discussed model merging challenges in ComfyUI and limitations of the diffusers pipeline.

WizardLM-2 Debuts Amidst Excitement and Uncertainty: The release of WizardLM-2 models by Microsoft has sparked enthusiasm for their potential GPT-4-like capabilities in an open-source format. However, the sudden takedown of the models due to a missed compliance review has led to confusion and speculation. Users compare the performance of WizardLM-2 variants and share tips for resolving compatibility issues in LM Studio.

Multimodal Models Advance with Idefics2 and Reka Core: Hugging Face's Idefics2 8B and Reka AI's Reka Core have emerged as powerful multimodal language models, showing strong results in visual question answering, document retrieval, and coding. The upcoming chat-focused variant of Idefics2 and Reka Core's competitive performance against industry giants have generated significant interest. Discussions also covered the cost-efficiency of models like JetMoE-8B and the launch of Snowflake's Arctic embed family of text-embedding models.

AI Discords Highlights and Discussions

The AI Discord channels covered a wide range of topics, from code translation and combating repetitive AI responses to performance upgrades and the disappearance of models such as WizardLM-2. Recurring themes included AI safety debates, new model releases, model preferences, the legal implications of AI technology, and optimization techniques.

HuggingFace Discord

IDEFICS-2 Takes the Limelight:

- The release of IDEFICS-2 introduces an impressive model with 8B parameters, excelling in tasks like image processing, visual question-answering, and document retrieval. An upcoming chat-focused variant of IDEFICS-2 is anticipated.

Knowledge Graphs Meet Chatbots:

- A blog post explores integrating Knowledge Graphs with chatbots to improve their performance, aimed at readers interested in more advanced chatbot functionality.

Snowflake's Arctic Expedition:

- Snowflake launched the Arctic embed family of models, which it says sets new benchmarks in text-embedding performance. Other finds shared in the channel included a Splatter Image space and a discussion of Multi-Modal RAG fusion.

Model Training and Comparisons Drive Innovation:

- An IP-Adapter Playground was unveiled for text-to-image experimentation. A new 'push_to_hub' option for pipelines in the transformers library was introduced for convenience, and members compared image captioning models.

AI Discord Community Highlights

The section highlights various discussions from different AI-related Discord channels. In the <a href='https://discord.com/channels/823971286308356157?utm_source=ainews&utm_medium=email&utm_campaign=ainews-mixtral-8x22b-instruct-defines-frontier' target='_blank'>Datasette - LLM (@SimonW)</a> Discord, there are updates on model testing and reported installation errors. The <a href='https://discord.com/channels/1087862276448595968?utm_source=ainews&utm_medium=email&utm_campaign=ainews-mixtral-8x22b-instruct-defines-frontier' target='_blank'>Alignment Lab AI</a> Discord mentions legal speculations and issues with 'wizardlm-2'. In the <a href='https://discord.com/channels/1089876418936180786?utm_source=ainews&utm_medium=email&utm_campaign=ainews-mixtral-8x22b-instruct-defines-frontier' target='_blank'>Mozilla AI</a> Discord, topics range from script updates to security vulnerability reporting procedures. The <a href='https://discord.com/channels/1002292111942635562/1002292398703001601/1230162110596649011?utm_source=ainews&utm_medium=email&utm_campaign=ainews-mixtral-8x22b-instruct-defines-frontier' target='_blank'>Stability.ai (Stable Diffusion)</a> Discord discusses new models, research findings, and technical advancements in Stable Diffusion 3, while also addressing concerns about licensing and model availability. The <a href='https://discord.com/channels/1179035537009545276/1179035537529643040/1229696690315989032?utm_source=ainews&utm_medium=email&utm_campaign=ainews-mixtral-8x22b-instruct-defines-frontier' target='_blank'>Unsloth AI (Daniel Han)</a> Discord delves into topics such as model distinctions, multilingual capabilities, and mobile deployment challenges.

Handling Training Issues and Model Hosting

Members discussed how to handle different LoRA alpha values during training and how to address loss spikes; suggested fixes included adjusting the rank (r) and alpha values and disabling packing. Another topic was whether token embeddings left untrained in the base Mistral model can be trained during fine-tuning. Members also asked how to save and host finetuned models in various formats with commands like save_pretrained_merged and save_pretrained_gguf, whether these commands must run in sequence, and whether the model must be saved in fp16 first. Lastly, there was a discussion on hosting a model stored as GGUF files on the Hugging Face Inference API.
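To make the r/alpha interplay concrete: in the standard LoRA formulation (for example, PEFT computes its scaling as `lora_alpha / r`), the low-rank update `B @ A` is multiplied by alpha / r before being added to the frozen weight. The sketch below uses plain Python and made-up matrices, not Unsloth code or real model weights, to show how raising alpha at a fixed r enlarges the update, which is one plausible contributor to loss spikes after changing alpha.

```python
# Minimal sketch: LoRA adds (alpha / r) * B @ A to a frozen weight.
# The matrices below are made-up illustrations, not values from any real model.

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def lora_delta(B, A, alpha, r):
    """Return the scaled low-rank update (alpha / r) * B @ A."""
    scale = alpha / r
    return [[scale * x for x in row] for row in matmul(B, A)]

def max_abs(m):
    """Largest absolute entry of a matrix."""
    return max(abs(x) for row in m for x in row)

# Hypothetical rank-2 adapter for a 3x3 weight: B is 3x2, A is 2x3.
B = [[0.1, 0.0], [0.0, 0.1], [0.1, 0.1]]
A = [[0.2, 0.0, 0.1], [0.0, 0.2, 0.1]]

for alpha in (2, 16, 32):
    delta = lora_delta(B, A, alpha=alpha, r=2)
    print(f"alpha={alpha:>2}, r=2 -> scale={alpha / 2:g}, max |delta W| = {max_abs(delta):.3f}")
```

Because the update grows linearly with alpha at a fixed r, doubling alpha doubles the adapter's effective contribution, so it may need a matching change in learning rate. Some variants, such as rsLoRA, scale by alpha / sqrt(r) instead.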

LM Studio Discord Updates

- Model Loading Error: Users had trouble loading the WizardLM-2 model in LM Studio; the suggested fix was to use GGUF quants with LM Studio version 0.2.19.

- Cleaning Up LM Studio: Members recommended deleting specific LM Studio folders to resolve issues, and stressed backing up data first.

- GPU Communication Breakthrough: A Reddit post celebrated successful direct GPU communication, potentially improving performance.

- Customizing GPU Load: Insights were provided on adjusting GPU offload in LM Studio's Linux beta.

- Discussion on Hugging Face Models: Users sought assistance in running specific Hugging Face models in LM Studio.

- Seeking Affiliate Marketing Partner: A user expressed interest in partnering with a coding expert for affiliate marketing campaigns.

- WaveCoder Ultra Unveiled: Microsoft released WaveCoder Ultra 6.7b specializing in code translation.

- AI Script Writing Inquiry: Members sought advice on generating full scripts with AI's help.

- Model Performance on Different Hardware: Discussions on model performance on different hardware setups like MacBook Pro with M3 chip.

- Curiosity about Windows Executable Signing: Members discussed Windows executable signing and challenges with code signing certificates.

Model Comparisons and Misadventures

Discussions compared the performance of various AI models, including GPT-4, Claude, and Mistral. Some users felt that newer versions at times seem lazier or less able to manage long contexts, while others found models like Claude 3 Opus useful for technical issues. Mistral's Mixtral 8x22B was also described as impressive for an open-source release.

WizardLM-2 Performance Update and OpenRouter Discussion

The WizardLM-2 model is now processing over 100 transactions per second following a complete database restart. The team is working on the remaining performance issues and posting updates on Discord. The OpenRouter Discord also featured discussions on AI frontend projects seeking development support, distinguishing AI-generated text, and improving sidebar and modal systems. Other topics included censorship layers, the multilingual capacity of AI models, server issues, and the impact of decoding algorithms on model quality. New model additions and deployments were announced, including Mixtral 8x22B Instruct.

Updates and Discussions on PyTorch and CUDA in Discord

- PyTorch Evolution and New Edition Tease: Discussions confirmed that while 'Deep Learning with PyTorch' is a good starting point despite being 4 years old, a new edition is in progress which will not cover topics like transformers and LLMs. Part III on deployment is considered outdated.

- Anticipating Blog Content: A member mentioned the possibility of creating a blog post from a draft chapter on attention/transformers.

- CUDA Discussions: Members discussed topics such as accelerated matrix operations in CUDA, challenges with JIT compilation, block size parameters exploration, data reading for CUDA C++, and speculations on CUDA cores and thread dispatch.

- RingAttention Working Group Concerns: Discussions revolved around a member stepping back from the RingAttention project due to time constraints.

- Quandaries and Optimizations in HQQ: Conversations delved into quantization axes, speed versus quality compromises in Half-Quadratic Quantization, further optimizations using Triton kernels, exploration of extended capabilities, and bench..
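The "quantization axis" question is about which dimension shares a scale and zero-point. The sketch below is a simplified stand-in, assuming plain min-max affine quantization rather than HQQ's half-quadratic solver, and a made-up weight matrix. It quantizes the same weights grouped by row and grouped by column and compares the reconstruction error.

```python
# Simplified stand-in for "quantization axis" discussions: plain min-max affine
# quantization, NOT HQQ's half-quadratic solver. The weight matrix is made up.

def quantize_groups(groups, bits=2):
    """Quantize each group (list of floats) with its own scale and zero-point.

    Returns the dequantized values so the error can be measured.
    """
    qmax = 2 ** bits - 1
    out = []
    for g in groups:
        lo, hi = min(g), max(g)
        scale = (hi - lo) / qmax if hi > lo else 1.0
        codes = [round((x - lo) / scale) for x in g]  # integers in [0, qmax]
        out.append([lo + c * scale for c in codes])
    return out

def transpose(m):
    return [list(col) for col in zip(*m)]

def mse(a, b):
    """Mean squared error between two equally shaped matrices."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)

# Hypothetical weights: the middle row has a much larger range than the others.
W = [
    [0.01, -0.02, 0.03, -0.01],
    [1.50, -2.00, 0.75, 1.25],
    [0.10, 0.05, -0.10, 0.00],
]

per_row = quantize_groups(W)                        # one scale/zero per row
per_col = transpose(quantize_groups(transpose(W)))  # one scale/zero per column
print(f"grouped by row:    MSE = {mse(W, per_row):.5f}")
print(f"grouped by column: MSE = {mse(W, per_col):.5f}")
```

On this toy matrix the column grouping gives lower error, but which grouping wins depends on how value ranges and outliers are distributed in real weights, and grouping also affects kernel speed, which is the speed-versus-quality trade-off discussed.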

AI Discussions and Developments

This section highlights various AI discussions and developments, including debates on binary versus non-binary thinking, which AI models suit writing literature reviews, and how to handle complications such as account terminations and policy violations. It also covers insights from different Discord channels on GPT models, constructing hierarchical structures within LlamaIndex, and advances in transformer architectures.

Cool Finds in HuggingFace

Splatter Art with Speed

The Splatter Image space on HuggingFace is a fast demo of Splatter Image, a method that reconstructs a 3D object as Gaussian splats from a single image.

Diving into Multi-Modal RAG

A speaker from LlamaIndex shared resources on Multi-Modal RAG (Retrieval Augmented Generation), showcasing applications that combine language and images. Their documentation explains how RAG's indexing, retrieval, and synthesis steps extend to images.

LLM User Analytics Unveiled

Nebuly introduced an LLM user analytics playground that's accessible without any login, providing a place to explore analytics tools. Feedback is requested for their platform.

ML Expanding into New Frontiers

An IEEE paper, available in the IEEE Xplore digital library, describes a scenario in which Machine Learning (ML) could be broadly applied.

Snowflake Introduces Top Text-Embedding Model

Snowflake launched the Arctic embed family of models, which it calls the world's best practical text-embedding models for retrieval use cases. According to Snowflake, the models surpass others in average retrieval performance. They are open-sourced under an Apache 2.0 license, available on Hugging Face, and coming soon to Snowflake's own ecosystem. Read more in their blog post.
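Retrieval with a text-embedding model generally means embedding the query and the documents, then ranking documents by vector similarity. The sketch below assumes cosine similarity and uses made-up 3-dimensional vectors and hypothetical document IDs in place of real Arctic embed outputs; it shows only the ranking step, not how any particular model produces its vectors.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def top_k(query_vec, corpus, k=2):
    """Rank documents by cosine similarity to the query embedding."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in corpus.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Made-up 3-d vectors standing in for real model embeddings.
corpus = {
    "snowflake-blog": [0.9, 0.1, 0.0],
    "gpu-benchmarks": [0.1, 0.9, 0.2],
    "embedding-faq": [0.8, 0.2, 0.1],
}
query = [1.0, 0.1, 0.0]
print(top_k(query, corpus, k=2))
```

Real deployments typically normalize embeddings once and use an approximate nearest-neighbor index instead of scoring every document, but the ranking criterion is the same.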

Multi-Step Tools Enhancing Efficiency

A Medium article discusses how multi-step tools developed by LangChain and Cohere can unlock efficiency improvements in various applications.

Axolotl-help-bot

Simplifying Epoch-wise Model Saving:

- A member inquired about configuring Axolotl to save a model only at the end of training and not every epoch. The solution involved setting `save_strategy` in the training arguments to `"no"` and implementing a custom callback for a manual save upon training completion.

Choosing a Starter Model for Fine-Tuning:

- When asked for a suitable small model for fine-tuning, members recommended "TinyLlama-1.1B-Chat-v1.0" because it is manageable for quick experiments. Members were guided to the Axolotl repository for example configurations like `pretrain.yml`.

Guidance on Axolotl Usage and Data Formatting:

- There was a discussion on concepts like `model_type` and `tokenizer_type`, and on how to format datasets for Axolotl training, particularly when using the "TinyLlama-1.1B-Chat-v1.0" model. For the task of text-to-color code generation, it was suggested to structure the dataset without "system" prompts and upload it as a Hugging Face dataset if it is not already available.

CSV Structure Clarification for Dataset Upload:

- Clarification was sought on whether a one-column CSV format is needed for uploading a dataset to Hugging Face for use with Axolotl. The formatted examples should be line-separated, with each line containing the input and output structured as per model requirements.
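One way to produce line-separated examples from a CSV is to emit one JSON object per line (JSONL). The sketch below assumes a two-column CSV with hypothetical `description` and `color` headers and writes hypothetical `input`/`output` fields; the field names must be adjusted to whatever dataset type is configured in Axolotl, which this sketch does not check.

```python
import csv
import io
import json

def csv_to_jsonl(csv_text):
    """Turn description,color rows into one JSON object per line (no system prompt)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        # Hypothetical field names; rename to match your Axolotl dataset type.
        record = {"input": row["description"].strip(), "output": row["color"].strip()}
        lines.append(json.dumps(record))
    return "\n".join(lines)

# Hypothetical two-column CSV: a color description and its hex code.
raw = """description,color
deep ocean blue,#1B3F8B
pale mint green,#C8F0D8
"""
jsonl = csv_to_jsonl(raw)
print(jsonl)
```

Saved as a `.jsonl` file, each line is a self-contained input/output example, in line with the advice to keep examples line-separated and free of system prompts.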

Posting Inappropriate Content:

- A user posted a message promoting unauthorized content, which was neither relevant to the channel's technology-oriented discussion nor compliant with community guidelines.

OpenInterpreter Chat Discussions

OpenAccess AI Collective (axolotl)

- A member inquired about preprocessing data for fine-tuning the TinyLlama model to predict color codes from descriptions.

- Guidance was provided on preparing the dataset for fine-tuning TinyLlama.

- An off-topic message advertising OnlyFans leaks was posted.

Latent Space

- Detailed benchmarks of language models are available on llm.extractum.io.

- The Payman AI project was introduced, enabling AI agents to pay humans for tasks.

- Supabase introduced an API for running AI inference models.

- Members speculated about the launch of Llama 3.

- OpenAI announced updates to the Assistants API.

Latent Space (continued)

- Discussions on AI wearables versus smartphones and the need for deep contextual knowledge in AI assistants.

- Members expressed excitement about the WizardLM-2 model and interest in personal AI assistants.

- Users shared struggles with software compatibility on Windows.

- AI hardware options and the importance of backend infrastructure are highlighted.

Interconnects

- Methods to improve winrate against different models were discussed.

- Reranking LLM outputs during inference was explored.

- A member proposed propagating web page quality scores through the link graph to rank pages.
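The proposal's details are not given here, so the following is only one plausible reading, named plainly: personalized PageRank, where the random-jump distribution comes from per-page quality priors (for example, from a quality classifier) and scores then flow along links. The graph, page names, and prior scores are all made up.

```python
# One plausible reading of "quality propagation": personalized PageRank whose
# teleport distribution comes from per-page quality priors. Graph and scores are made up.

def propagate_quality(links, seed_quality, damping=0.85, iters=100):
    """Spread prior quality scores along outgoing links.

    links: page -> list of pages it links to.
    seed_quality: page -> non-negative prior score (e.g. from a quality classifier).
    Returns page -> propagated score; scores sum to 1.
    """
    pages = set(links) | set(seed_quality)
    for outs in links.values():
        pages.update(outs)
    total = sum(seed_quality.values())
    prior = {p: seed_quality.get(p, 0.0) / total for p in pages}
    score = dict(prior)
    for _ in range(iters):
        new = {p: (1 - damping) * prior[p] for p in pages}
        for src in pages:
            outs = links.get(src, [])
            if outs:
                share = damping * score[src] / len(outs)
                for dst in outs:
                    new[dst] += share
            else:
                # Dangling page: send its mass back according to the prior.
                for p in pages:
                    new[p] += damping * score[src] * prior[p]
        score = new
    return score

links = {
    "wiki": ["docs"],
    "docs": ["blog"],
    "blog": ["wiki"],
    "spam1": ["spam2"],
    "spam2": ["spam1"],
}
scores = propagate_quality(links, seed_quality={"wiki": 1.0})
for page, s in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page:6s} {s:.3f}")
```

The design choice worth noting is that pages unreachable from any high-quality seed (the `spam1`/`spam2` cycle here) receive no score at all, however densely they link to each other.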

More Interconnects

- The Mixtral 8x22B model and its chatbot capabilities were discussed.

- The OLMo 1.7 7B model's performance improvement was noted.

- Discussions on evaluating 'quality' content and the web graph from Common Crawl occurred.

Further Interconnects

- Challenges in replicating the Chinchilla scaling paper were discussed.

- Skepticism was expressed about the conclusions from scaling law papers.
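For context, a central object in the Chinchilla paper is its parametric loss fit, L(N, D) = E + A / N^alpha + B / D^beta, where N is the parameter count and D the number of training tokens. The sketch below evaluates it with the constants Hoffmann et al. (2022) report for their "Approach 3" fit. Those constants are the kind of result replication attempts have questioned, so treat the output as illustrative, not authoritative.

```python
def chinchilla_loss(n_params, n_tokens, E=1.69, A=406.4, B=410.7, alpha=0.34, beta=0.28):
    """Parametric fit L(N, D) = E + A / N**alpha + B / D**beta.

    Defaults are the constants Hoffmann et al. (2022) report for their
    "Approach 3" fit; replication attempts have questioned these values.
    """
    return E + A / n_params ** alpha + B / n_tokens ** beta

# Chinchilla itself: 70B parameters trained on 1.4T tokens.
loss = chinchilla_loss(70e9, 1.4e12)
print(f"predicted loss: {loss:.3f}")

# Training FLOPs are commonly approximated as C ~= 6 * N * D.
print(f"approx. training FLOPs: {6 * 70e9 * 1.4e12:.2e}")
```

Small changes to alpha and beta shift the predicted compute-optimal split between parameters and tokens, which is why disagreements over the fitted constants matter for the paper's conclusions.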

Community Engagement and Requests for Clarifications

- Discord users reacted to the Chinchilla paper issue with concern and surprise, posting comments such as 'Chinchilla oops?' and 'oh no'.

- One of the researchers attempting the replication contacted the original authors for clarification but received no response, which frustrated the community.

Text Data, Multimodal Data, and Tokenization

A member discusses the gray area that scraped text data occupies under EU copyright law. Suggested sources of permissively licensed multimodal data include Wikimedia Commons and Creative Commons Search. One member shares a Google Colab notebook on creating a Llama tokenizer without relying on HuggingFace, and another points out a misspelling in a shared tokenizer. A contributor mentions Mistral's tokenization library as an aid to standardizing fine-tuning. Discussions on decoding strategies for language models and the impact of min_p sampling on creative writing are also highlighted.
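min_p sampling truncates the next-token distribution relative to its peak: a token survives only if its probability is at least min_p times the top token's probability, and the survivors are renormalized before sampling. The sketch below is a minimal illustration on a hypothetical four-token distribution, not any inference library's implementation.

```python
import random

def min_p_filter(probs, min_p=0.1):
    """Keep tokens whose probability is at least min_p * (max probability), then renormalize."""
    threshold = min_p * max(probs.values())
    kept = {tok: p for tok, p in probs.items() if p >= threshold}
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}

def sample_min_p(probs, min_p=0.1, rng=random):
    """Draw one token from the min_p-filtered distribution."""
    filtered = min_p_filter(probs, min_p)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical next-token distribution.
probs = {"the": 0.50, "a": 0.30, "crimson": 0.15, "zzz": 0.05}
print(min_p_filter(probs, min_p=0.2))  # threshold = 0.2 * 0.50 = 0.10, so "zzz" is dropped
print(sample_min_p(probs, min_p=0.2, rng=random.Random(0)))
```

Because the cutoff scales with the top probability, min_p keeps more candidates when the model is uncertain and fewer when it is confident, which is the property often cited for creative writing at higher temperatures.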

FAQ

Q: What is the Multimodal Diffusion Transformer architecture?

A: The Multimodal Diffusion Transformer (MMDiT) architecture is used in Stable Diffusion 3 and Stable Diffusion 3 Turbo, introduced by Stability AI. According to Stability AI, MMDiT uses separate sets of weights for image and language representations, and the company claims these models outperform DALL-E 3 and Midjourney v6.

Q: What are the key features of Stable Diffusion 3 and Stable Diffusion 3 Turbo?

A: Stable Diffusion 3 and its faster variant, Stable Diffusion 3 Turbo, use the Multimodal Diffusion Transformer architecture, and Stability AI promotes them as fast and reliable. Stability AI plans to make SD3 weights available for self-hosting with a Stability AI Membership.

Q: What are the discussions surrounding the licensing clarification for personal vs commercial use of Stable Diffusion 3?

A: The AI community eagerly awaits clarification on the licensing terms for personal and commercial use of Stable Diffusion 3, as users are interested in understanding the implications of using SD3 for different purposes.

Q: Which AI models were discussed in the Unsloth AI Discord?

A: Members discussed GPT-4 (as a possible fine-tuned iteration of GPT-3.5), the multilingual capabilities of Mistral 7B, and Mixtral 8x22B. The open-source release of Mixtral 8x22B under the Apache 2.0 license drew attention for its multilingual fluency and long context window.

Q: What led to the takedown of the WizardLM-2 models by Microsoft?

A: The WizardLM-2 models by Microsoft were unpublished not due to toxicity concerns but because of a lack of compliance review. This takedown has sparked confusion and discussions within the AI community, with some users expressing interest in obtaining the original version.

Q: What is the significance of Idefics2 and Reka Core in the realm of multimodal language models?

A: Idefics2 8B, from Hugging Face, and Reka Core, from Reka AI, have emerged as powerful multimodal language models excelling in tasks like visual question answering, document retrieval, and coding. Their competitive performance has generated significant interest in the AI community.

Q: What are the key highlights of Snowflake's Arctic embed family of models?

A: Snowflake has launched the Arctic embed family of models, which it calls the world's best practical text-embedding models for retrieval use cases. According to Snowflake, the models surpass others in average retrieval performance; they are open-sourced under an Apache 2.0 license and available on platforms like Hugging Face.

Q: How do the AI Discord channels contribute to discussions within the AI community?

A: The AI Discord channels serve as hubs for conversations ranging from code translation and repetitive AI responses to model performance upgrades and disappearances. They offer insights into AI safety debates, advancements in AI models, and various technical challenges faced by engineers and enthusiasts in the field.

Q: What are some recent trends in AI model development and deployment discussed in the Discord channels?

A: Discussions in the AI Discord channels highlight trends like model preferences, legal implications of AI technology, optimization techniques, and the challenges of deploying and hosting AI models. The community actively engages in conversations on alpha values, loss spikes, and model merging challenges.

Q: What are some key topics of discussion within the AI Discord communities related to AI model performance and advancements?

A: AI enthusiasts engage in discussions on AI model performance on different hardware setups, evaluations of specific AI models like GPT-4 and Mistral, and challenges related to software compatibility on various platforms. Discussions also touch upon hardware options, backend infrastructure, and future developments in AI technologies.
