[AINews] OpenAI takes on Gemini's Deep Research • ButtondownTwitterTwitter

Chapters

AI Twitter Recap

Deep Learning and AI Community Updates

Advanced Model Training and Optimization Techniques

DSPy Discussions and Updates

Using Ollama with Custom Models

Aider v0.73.0 Release and Updates

Yannick Kilcher Paper Discussion

Emerging Models and AI Discussions

OpenAI Discussions on GPT Models and Applications

Deep Research, SoftBank and OpenAI partnership, Crystal Intelligence model, LLM productivity impacts, Gemini Deep Research limitations

Interconnects: Memes, Ghosthunters, TechCrunch, RLHF, and Economic Charts

Eleuther ▷ #interpretability-general

Remote MCP Tools and Discord Confusion

Notebook LM Discord Use Cases

Email Alerts, Study Session, and Course Website Navigation

Axolotl AI ▷ #general (3 messages)

AI Twitter Recap

Advances in Reinforcement Learning (RL) and AI Research

-

Reinforcement Learning Simplified and Its Impact on AI: @andersonbcdefg changed his mind and now thinks that RL is easy, reflecting on the accessibility of RL techniques in AI research.

-

s1: Simple Test-Time Scaling in AI Models: @iScienceLuvr shared a paper on s1, demonstrating that training on only 1,000 samples with next-token prediction and controlling thinking duration via a simple test-time technique called budget forcing leads to a strong reasoning model. This model outperforms previous ones on competition math questions by up to 27%. Additional discussions can be found here and here.

-

RL Improves Models' Adaptability to New Tasks: Researchers from Google DeepMind, NYU, UC Berkeley, and HKU found that reinforcement learning improves models' adaptability to new, unseen task variations, while supervised fine-tuning leads to memorization but remains important for model stabilization.

-

Critique of DeepSeek r1 and Introduction of s1: @Muennighoff introduced s1, which reproduces o1-preview scaling and performance with just 1K high-quality examples and a simple test-time intervention, addressing the data intensity of DeepSeek r1.

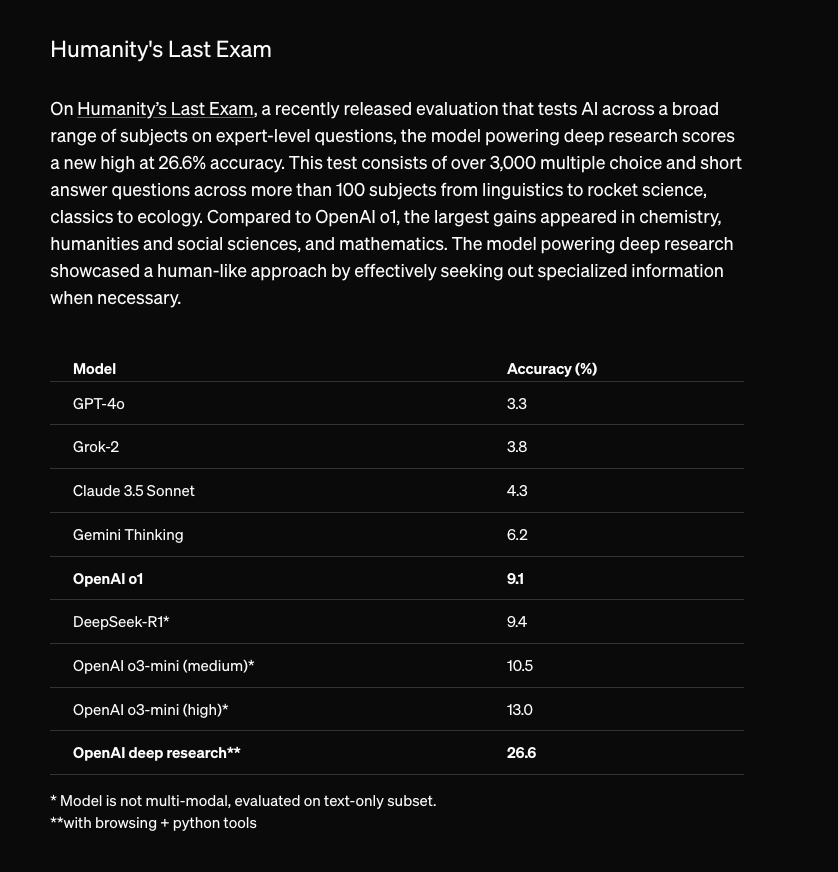

OpenAI's Deep Research and Reasoning Models

-

Launch of OpenAI's Deep Research Assistant: @OpenAI announced that Deep Research is now rolled out to all Pro users, offering a powerful AI tool for complex knowledge tasks. @nickaturley highlighted its general-purpose utility and potential to transform work, home, and school tasks.

-

Improvements in Test-Time Scaling Efficiency: @percyliang emphasized the importance of efficiency in test-time scaling with only 1K carefully chosen examples, encouraging methods that improve capabilities per budget.

-

First Glimpse of OpenAI's o3 Capabilities: @BorisMPower expressed excitement over what o3 is capable of, noting its potential to save money and reduce reliance on experts for analysis.

Developments in Qwen Models and AI Advancements

-

R1-V: Reinforcing Super Generalization in Vision Language Models: @_akhaliq shared the release of R1-V, demonstrating that a 2B model can outperform a 72B model in out-of-distribution tests within just 100 training steps. The model significantly improves performance in long contexts and key information retrieval.

-

Qwen2.5-Max's Strong Performance in Chatbot Arena: @Alibaba_Qwen announced that Qwen2.5-Max is now ranked #7 in the Chatbot Arena, surpassing DeepSeek V3, o1-mini, and Claude-3.5-Sonnet. It ranks 1st in math and coding, and 2nd in hard prompts.

-

s1 Model Exceeds o1-Preview: [@arankomatsuzaki highlighted that s1-32B, after supervised fine-tuning on **Q

Deep Learning and AI Community Updates

The AI Safety and Defending Against Jailbreaks section highlights Anthropic's efforts in preventing universal jailbreaks with Constitutional Classifiers, inviting users to test the safety techniques and discuss concerns about hallucinations in AI models. In the AI Tools and Platforms for Developers part, advancements in tools like SWE Arena for Vibe Coding, Perplexity AI Assistant, and Llama Development Tools are shared, showcasing new features and user experiences. Memes and humor add a lighthearted touch to the community discussions. Moving on to the AI Reddit Recap, the /r/LocalLlama section delves into themes like the paradigm shift in AI model hardware towards CPU+RAM, the rise of Mistral, Qwen, and DeepSeek outside the USA, the traction of Phi 4 Model on underserved hardware, and the competence of DeepSeek-R1 in complex problem solving. The Other AI Subreddit Recap explores topics like OpenAI's new hardware initiatives, critique on AI outperforming human expertise claims, and stability AI founder's perspective on machine intelligence. Lastly, the AI Discord Recap covers DeepSeek AI's ascendancy and regulatory scrutiny, OpenAI's o3-mini performance and public scrutiny, and the evolving landscape of AI tooling and IDEs.

Advanced Model Training and Optimization Techniques

Unsloth AI introduces dynamic quantization shrinking model sizes, while GRPO gains ground in reinforcement learning discussions. Test-time compute tactics like Budget Forcing emerge to enhance reasoning performance. Hardware hurdles are highlighted with RTX 5090 surpassing RTX 4090 in AI tasks, and AMD's RX 7900 XTX struggling with large language models. Shared memory hacks on GPUs boost LM Studio efficiency. The section also covers OpenAI's Deep Research Agent, legislative actions impacting AI regulation, and advancements in AI model training techniques like Self-Other Overlap fine-tuning and OpenEuroLLM launching EU-focused language models.

DSPy Discussions and Updates

Various discussions and updates related to DSPy were discussed in this section. DeepSeek's symbolic representation of hopes and fears about AI, challenges faced by SAEs in steering LLMs predictably, version 2.6 deprecating typed predictors, interest in mixing DSPy chain-of-thought with R1 models, and issues with streaming outputs in DSPy were highlighted.

Using Ollama with Custom Models

To utilize a fine-tuned Mistral model with Ollama, one can follow the Unsloth documentation which simplifies the process for local integration.

- Using the

FastLanguageModel.from_pretrainedmethod allows for conversion to 4-bit and saving with ease.

Aider v0.73.0 Release and Updates

The Aider v0.73.0 release introduces new features including full support for o3-mini and a --reasoning-effort argument with low, medium, and high options. Auto-creating parent directories when creating new files enhances file management. Improvements have been made to handle context window size limits effectively, avoiding user errors. Aider now supports free access to R1 on OpenRouter with the --model openrouter/deepseek/deepseek-r1:free command. Users can use the new model setting remove_reasoning: tagname to remove model-specific reasoning tags from responses. The release notes highlight that Aider wrote 69% of the code in this version, emphasizing internal development based on user feedback and requirements.

Yannick Kilcher Paper Discussion

LLMs struggle with math:

A member likened LLMs and reinforcement learning to trying to brush teeth with a fork, indicating a fundamental mismatch for mathematical tasks. Further discussion highlighted that models like o1 & r1 scored differently on math problems with some members deeming OpenAI's models inferior.

- One user stated that o3-mini performed well in mathematical reasoning, solving difficult puzzles better than others, which spurred interest in mathematical reasoning competitions.

SOO fine-tuning aims for honest AI:

A paper presented discussed Self-Other Overlap (SOO) fine-tuning in AI Safety, aimed at improving honesty by aligning AI's self-representation with perceptions of others. It reported significant reductions in deceptive responses across various model sizes without harming overall task performance.

- Experiments showed that deceptive responses in Mistral-7B dropped to 17.2%, while other models also experienced similar reductions, underscoring the efficacy of SOO in reinforcement learning scenarios.

Critique of OpenAI's approach:

Concerns were raised about OpenAI's models, suggesting they may release products that appear compelling while concealing flaws. Discussion referenced Google's approach to engineering benchmarks through vast synthetic data, likening that method to lacking precision in math capabilities.

- A user sarcastically remarked on OpenAI's strategy as creating smoke and mirrors, referring specifically to past initiatives like Sora.

Emerging Models and AI Discussions

Emerging models: DeepSeek-R1 models achieve performance on par with OpenAI's models across various tasks, including math and reasoning. The team's approach contrasts with reinforcement learning, focusing on fine-tuned reasoning patterns for efficiency and effectiveness.

- AI Discussions enter a Marathon mode where discussions have become continuous and ongoing, indicating high engagement and enthusiasm within the community.

OpenAI Discussions on GPT Models and Applications

Members discussed various topics related to OpenAI and GPT models in this section:

- Limits of o3 models and user curiosity about different restrictions among the models.

- Frustrations over declining performance in models like O1 and O3 mini.

- Insights into the roles of different models, such as GPT-4o and O3-mini, for specific tasks like image questions and coding.

- User experience issues with ChatGPT and concerns about reliability.

- Interest in using AI for creating children's books and the creative applications of AI in literature.

Deep Research, SoftBank and OpenAI partnership, Crystal Intelligence model, LLM productivity impacts, Gemini Deep Research limitations

Deep Research Launch by OpenAI:

- OpenAI's new feature, Deep Research, allows users to interact with the O3 model, providing research question refinements and a sidebar displaying reasoning progress during query execution.

- Initial impressions highlight the potential of Deep Research in synthesizing information, though some users note limitations in thorough source analysis.

SoftBank's $3 Billion Commitment to OpenAI:

- SoftBank has announced plans to purchase $3 billion worth of OpenAI products annually, while establishing a joint venture focused on the Crystal Intelligence model in Japan.

- This exclusive offering will integrate OpenAI's technology into SoftBank subsidiaries and aims to enhance AI solutions for Japanese enterprises.

Launch of Crystal Intelligence Model:

- The Crystal Intelligence model is designed to autonomously analyze and optimize a company's legacy code over the past 30 years, with future plans to introduce AGI within two years.

- Masayoshi Son emphasized the transformative potential of AI, referring to it as Super Wisdom, in his remarks during the launch event.

Impact of LLMs on Productivity:

- Users have reported significant productivity boosts from LLMs, stating they can now complete tasks that would previously take days, highlighting a shift in software development capabilities.

- However, concerns about misinformation and limitations arise, particularly regarding reliance on algorithms and source quality in generated content.

Limitations of Gemini Deep Research:

- Users of Gemini Deep Research noted its tendency to produce summaries rather than synthesizing information from multiple sources, which limits its effectiveness.

- There are also concerns regarding the inclusion of low-quality content from SEO-focused pages during research processes.

Interconnects: Memes, Ghosthunters, TechCrunch, RLHF, and Economic Charts

- HF_ENABLE_FAST_TRANSFER boosts efficiency: A member highlighted using HF_ENABLE_FAST_TRANSFER, which reportedly triples the effectiveness of the HF ecosystem. Discussion ensued about the default transfer speeds of large file storage.

- Bengali Ghosthunters take center stage: Bengali Ghosthunters generated humor as a member recounted an experience where Gemini Flash Thinking became erratic while helping him learn about LLMs. The topic sparked further exploration in the connection between technology and humor.

- TechCrunch memes making waves: A hilarious reaction was captured as the headline from TechCrunch was praised with a member remarking on their ability to post memes on X like a champ.

- Estimated Economic Value charts cause a stir: A member noted excitement over new 'Estimated Economic Value' charts promising to intrigue fans of the ambiguous test time compute log plots.

- Debates on RLHF and reasoning models: A member asserted that RLHF continues to be vital despite the rise of reasoning models. This sparked a discussion emphasizing both are components of a larger post-training strategy.

Eleuther ▷ #interpretability-general

New paper by David Chalmers, Crosscoder repositories, Sparse autoencoders optimization, Expert evaluation in MoE models

-

Chalmers on Propositional Attitudes: A new paper by David Chalmers argues that extracting propositional attitudes from AI is more impactful than pursuing mechanistic understanding. Chalmers also cited a previously published paper from the team, expressing gratitude for the reference.

-

Crosscoder Repositories Discussion: A member inquired about open source repositories for training and using crosscoders, highlighting the ongoing challenge of reproducibility in the field. Another member shared a GitHub link to dictionary_learning as a potential resource.

-

Sparse Autoencoders and Optimization Scalability: There was a discussion about the challenges of sparse recovery, suggesting that the search for the right representation typically requires iterative methods. It was pointed out that the scale required for effective analysis might render these methods infeasible in practice.

-

Evaluating Experts in Mixture of Experts (MoE): Discussion centered on identifying the most active experts in a DeepMind code contests dataset, with frequency and weight data provided for several experts. The top experts were highlighted, and it was noted that their performance assessments might help inform pruning strategies in MoE models.

-

Cosine Similarity vs Euclidean Distance in Expert Weights: Clarification was given that the distance metric used for the analysis of expert distributions was euclidean, rather than cosine similarity as initially assumed. Cosine similarity was actually referenced as a derived metric based on expert distribution vectors aggregated by the MoE gating module.

Remote MCP Tools and Discord Confusion

Members engaged in discussions about the need for remote capabilities in MCP tools, highlighting the focus on local implementations. Concerns were raised about the scalability and usability of current MCP setups, prompting suggestions to explore alternative setups. Additionally, confusion arose over two similar Discord servers, with clarifications made regarding their official status and management by non-Anthropic users. The community also delved into topics such as Superinterface products, load balancing using Litellm Proxy, and the preference for open-source tools over proprietary options.

Notebook LM Discord Use Cases

Introduced in this section are various use cases discussed by members in the Notebook LM Discord channel. From integrating complete tutorials for learning to exploring the usage of Notebook LM in BPO settings, the community expresses interest in the diverse applications of this tool. One user details their experience of using Notebook LM to learn Japanese by analyzing video transcripts. Additionally, the launch of a comical and deep podcast named 'Roast or Toast' is announced, inviting listeners to tune in for an interesting exploration of profound topics with a unique format.

Email Alerts, Study Session, and Course Website Navigation

Quizzes and study materials related to reasoning techniques are prominently featured in this chunk. Members highlighted the availability of quizzes on the course website and the absence of email alerts for new quizzes. The course team emphasizes timely releases of quizzes and answer keys to avoid cluttering inboxes. A study session focusing on reasoning techniques is set to commence shortly, providing an opportunity for members to enhance their understanding. Direct links for easy access to quizzes on the course website were also shared to facilitate efficient navigation.

Axolotl AI ▷ #general (3 messages)

Axolotl AI ▷ #general (3 messages):

-

Member unsure about fine-tuning reasoning models: A member expressed their confusion about how to fine-tune reasoning models, humorously admitting they don't even know where to start.

- Lol - it seems they are looking for guidance in a complex area.

-

Colab notebook shared for GRPO: Another member shared a Colab notebook for GRPO, providing a resource for those interested in the topic.

- This could be an excellent starting point for members wanting to learn more about GRPO specifically.

FAQ

Q: What is reinforcement learning and its impact on AI research?

A: Reinforcement learning is a technique where an agent learns to make decisions by trial and error to achieve a goal. Its impact on AI research includes improved adaptability to new tasks and enhanced reasoning models.

Q: What is the concept of test-time scaling in AI models?

A: Test-time scaling in AI models refers to controlling thinking duration by utilizing a technique like budget forcing, enabling strong reasoning models with improved performance using limited training samples.

Q: How does reinforcement learning affect models' adaptability to new tasks?

A: Reinforcement learning improves models' adaptability to new, unseen task variations compared to supervised fine-tuning, which can lead to better performance and model stabilization.

Q: What is the Self-Other Overlap fine-tuning approach in AI safety?

A: Self-Other Overlap (SOO) fine-tuning in AI safety aims at aligning an AI's self-representation with how others perceive it to promote honesty. This approach reduces deceptive responses across various model sizes without compromising overall task performance.

Q: How do fine-tuned reasoning patterns affect model efficiency and effectiveness?

A: Fine-tuned reasoning patterns, as seen in models like DeepSeek-R1, focus on efficiency and effectiveness by improving performance in tasks like math and reasoning without relying heavily on reinforcement learning.

Q: What are some concerns raised about OpenAI's models and their approach?

A: Concerns include the potential for releasing products with hidden flaws, drawing comparisons to Google's benchmark engineering through synthetic data. There are also doubts about precision in math capabilities and the effectiveness of the models.

Q: How does o3-mini perform in mathematical reasoning compared to other models?

A: Users reported that o3-mini excels in mathematical reasoning, solving difficult puzzles better than other models, sparking interest in mathematical reasoning competitions.

Q: What is the significance of Deep Research Assistant by OpenAI?

A: The Deep Research Assistant by OpenAI provides a tool for users to interact with the O3 model, offering research question refinements and a sidebar for tracking reasoning progress during queries, showcasing potential in information synthesis.

Q: How does Self-Other Overlap fine-tuning contribute to AI safety?

A: Self-Other Overlap (SOO) fine-tuning improves honesty in AI by aligning self-representation with perceptions of others, resulting in reduced deceptive responses across different model sizes without compromising task performance.

Q: What are some advancements in AI model training techniques mentioned?

A: Advancements like Self-Other Overlap fine-tuning and OpenEuroLLM launching EU-focused language models show progress in enhancing AI model training effectiveness and promoting alignment with ethical principles.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!